Many of the risks attached to sensitive consumer data are directional in an important sense. If I start with data and give it to you then I retain some liability for your bad behavior but there is no similar way for me to get you in trouble. Risk generated by sharing data is thus asymmetric. It is both true that public relations liability happens to work out this way and that this has been the design of data privacy regulations new and old. This is perhaps only a manifestation of our everyday common sense, as we are often upset with those who leaked our secrets and not with those who happened to learn them.

An excellent case study recently in the newspaper was the data breach at laboratory company Quest Diagnostics. One might put “at Quest Digagnostics” in quotation marks because this was how the headlines were typically written, yet the breach actually occurred at the debt collection agency AMCA. Quest had hired this firm, handed over data to them to enable their work, and it was actually AMCA that lost the data.

It is really little consolation to Quest, though, that it was a partner who stumbled. Quest is a much larger company, directly consumer-facing and visible, that patients are aware is holding personal data about them. Not much of anyone has heard of AMCA, and it is inevitable that the lion’s share of the public relations damage is heaped upon Quest. Furthermore, Quest is explicitly liable under HIPAA regulations for AMCA’s failures.

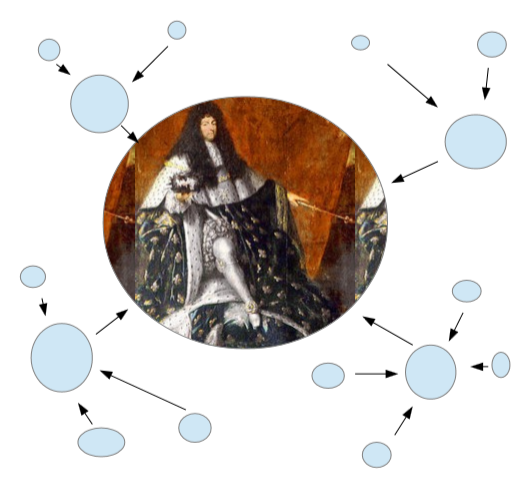

To be a firm that generates regulated data, like Quest, is tough sledding in 2019. Such firms have many legitimate reasons to share data, and each generates for risk that the partner does not equally share and is not likely to “feel” as well as one would hope. You might visualize the difficulty of this situation using a common concept in computer science and mathematics: the directed acyclic graph - a bunch of dots and arrows that point in some direction but do not form loops. Data propagates along the arrows and risk propagates back, and to be a company with all arrows out (lab testing companies, hospital networks, and any business that generates medical data are roughly in this boat) is to carry all the risk without much ability to manage it… at least so far.

Technologies like homomorphic encryption and zero-knowledge proof have great potential to change this situation, as they remove the need for partners to truly hold the data. Quest might have shared an encrypted dataset with AMCA and then used a cryptographic process to regulate the information they extracted from it - presumably only the information AMCA needed. It is debatable whether a breach of encrypted data is really a breach, especially when it is unlikely anyone will be able to do anything useful with that encrypted data. There is much to be gained, as there is not only the risk of actions taken to consider but the analytics value lost to data siloing undertaken avoiding that risk.